The DragTonight app leverages Google's AutoML to help drag fans find local shows.

The problem: "Is this drag?"

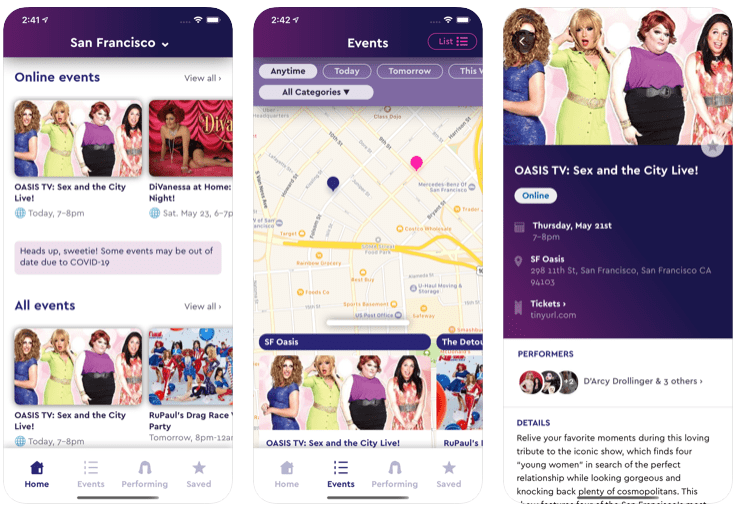

DragTonight is a mobile app that shows you when and where the nearest drag show is happening. It covers the 26 largest metro areas in the United States.

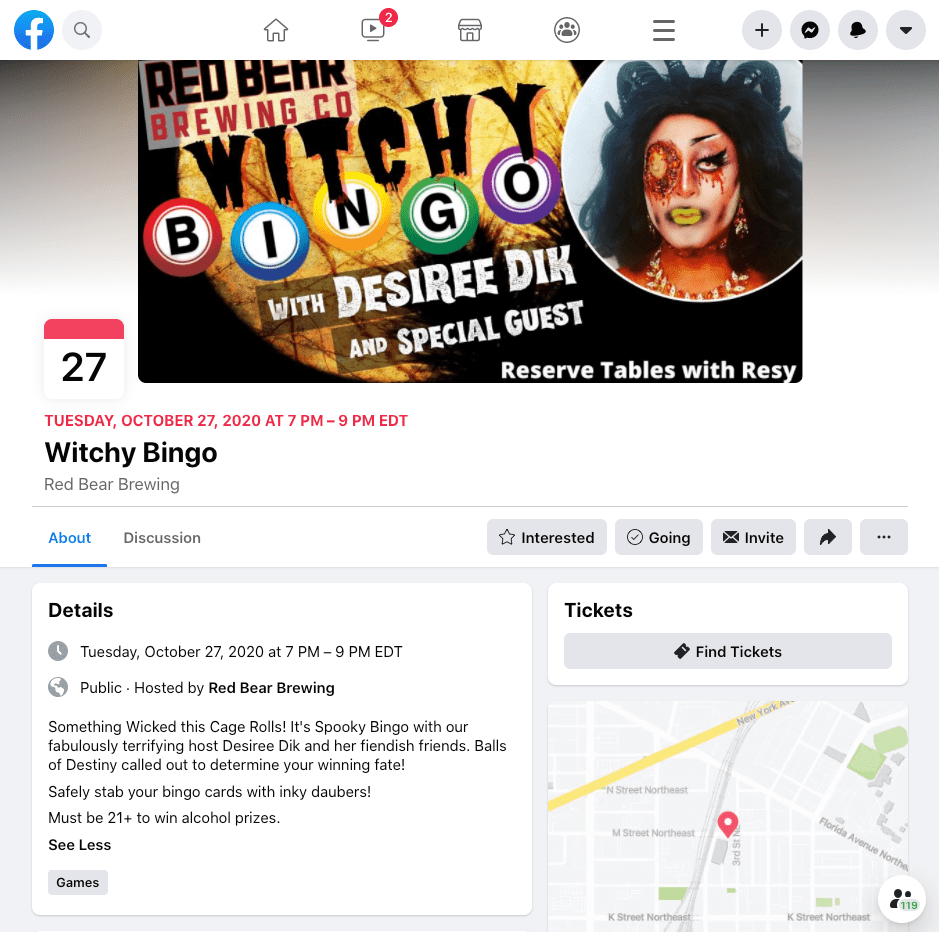

The listings are scraped from Facebook events, but the scraping process also pulls in lots of events that are not drag shows.

For every actual drag show it finds, it also pulls in a karaoke night at a popular gay bar, or a fundraiser event for the local LGBTQ kickball team.

Starting with keywords and heuristics

My first attempt at separating drag from muggle events was a list of phrases and rules. The obvious cases are easy: if an event explicitly mentions "drag show" or "drag queen," for example, we're good to go. But listings mentioning "dragon" or "the drag" are probably going to be false positives.
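To make the idea concrete, here is a minimal sketch of phrase-and-rule matching. It is not the app's actual rule set: the function name `keyword_verdict`, the three verdict strings, and the exact patterns are hypothetical, built only from the examples mentioned above.

```python
import re

# Explicit phrases that are safe to auto-approve (taken from the examples above).
STRONG_PHRASES = re.compile(r"\bdrag (?:show|queen)s?\b")
# A bare "drag" is only a weak hint. The word boundary keeps "dragon" from matching.
BARE_DRAG = re.compile(r"\bdrag\b")
# Phrases that contain "drag" but usually mean something else.
FALSE_POSITIVES = re.compile(r"\bthe drag\b")


def keyword_verdict(text: str) -> str:
    """Return "approve", "maybe", or "no_keyword" for an event's text."""
    lowered = text.lower()
    if STRONG_PHRASES.search(lowered):
        return "approve"
    # Blank out known false-positive phrases before looking for a bare "drag".
    remainder = FALSE_POSITIVES.sub(" ", lowered)
    if BARE_DRAG.search(remainder):
        return "maybe"
    return "no_keyword"
```

With these patterns, "Friday Night Drag Show!" is approved, "Drag Bingo" comes back as a maybe, and both "Dragon Boat Festival" and "Cruising the Drag" produce no keyword match.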

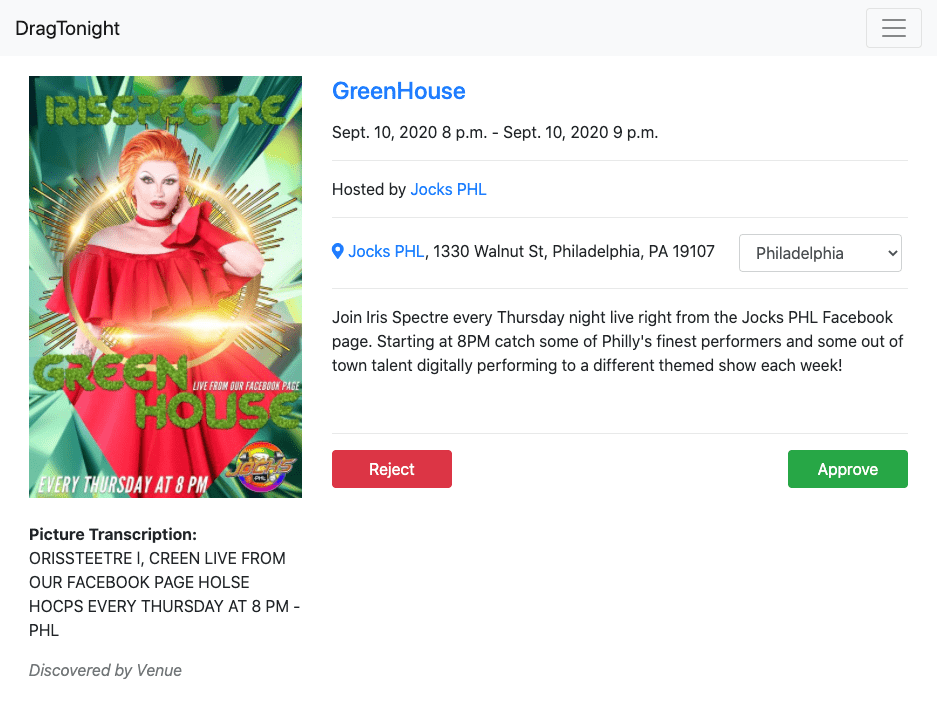

The matching started with just the event title and description, but eventually expanded to event cover photos. The photos were submitted to Amazon's Rekognition service for optical character recognition, which returned any text found in the image.
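A rough sketch of the OCR step, assuming the `boto3` client for Rekognition and its `detect_text` call. The helper names and the `min_confidence` cutoff of 80 are my own assumptions, not details from the app.

```python
from typing import List


def extract_lines(response: dict, min_confidence: float = 80.0) -> List[str]:
    """Pull line-level text out of a Rekognition DetectText response."""
    return [
        detection["DetectedText"]
        for detection in response.get("TextDetections", [])
        # Rekognition reports both whole lines and single words; keep lines only.
        if detection.get("Type") == "LINE"
        and detection.get("Confidence", 0.0) >= min_confidence
    ]


def ocr_cover_photo(image_bytes: bytes) -> List[str]:
    """Send a cover photo to Rekognition and return the text lines it finds."""
    import boto3  # imported here so extract_lines works without AWS configured

    client = boto3.client("rekognition")
    response = client.detect_text(Image={"Bytes": image_bytes})
    return extract_lines(response)
```

The returned lines can then be fed through the same keyword rules as the title and description.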

That's enough, problem solved! Right?

Unfortunately for us, a large number of shows don't actually mention "drag" at all! So while we can fast-track some events, others fall through the cracks without a secondary intervention.

Adding humans in the loop

For events that didn't get auto-approved, I added a manual review process. Every morning I'd spend several minutes clicking through an interface, approving or rejecting the potential drag shows. (Eventually a virtual assistant took this over.)

This could be a time-intensive process, especially before COVID-19 when live shows were in full swing, and bringing on a virtual assistant meant real money out the door every month. Not an ideal situation.

Automating approvals with AI

This type of classification problem is fortunately well-suited for deep learning. Better yet, after a year of tagging shows as drag/not-drag, I had built up a data set of over 13,000 shows for training a model.

Cloud providers like Amazon and Google now offer some amazing AI tools, ranging from out-of-the-box categorization services ("What language is this text?") to the ability to host fully-customized models.

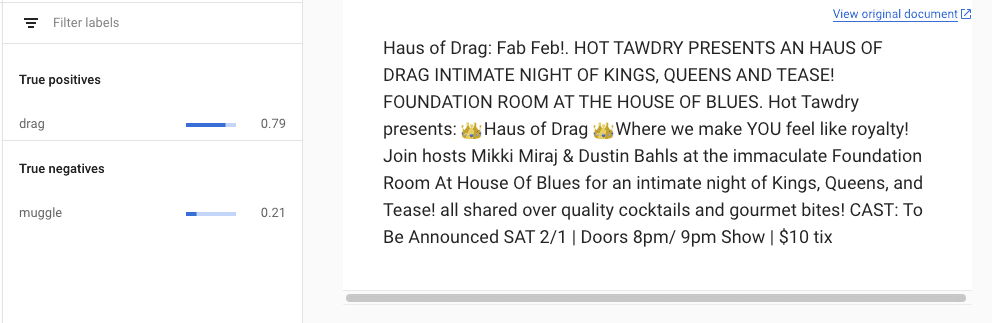

Since Google doesn't offer a drag-or-not API to developers, I went with an intermediate tool called AutoML. You bring your own sample data with labeled outputs, upload it to their environment, then the AutoML service grinds away behind the scenes to find the best model and parameters.
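As an illustration of the "bring your own labeled data" step, here is a small sketch that turns tagged events into a text-and-label CSV. The event fields (`title`, `description`, `image_text`, `is_drag`), the label names, and the two-column layout are all assumptions on my part; the exact import format depends on which AutoML product you use, so check its documentation before uploading.

```python
import csv
import io


def build_training_csv(events) -> str:
    """Serialize labeled events as rows of: text,label (no header row)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for event in events:
        parts = (event["title"], event["description"], event.get("image_text", ""))
        # Join the text fields and collapse newlines and repeated spaces.
        text = " ".join(" ".join(part for part in parts if part).split())
        label = "drag" if event["is_drag"] else "not_drag"
        writer.writerow([text, label])
    return buffer.getvalue()
```

The `csv` module takes care of quoting any descriptions that contain commas or quotation marks.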

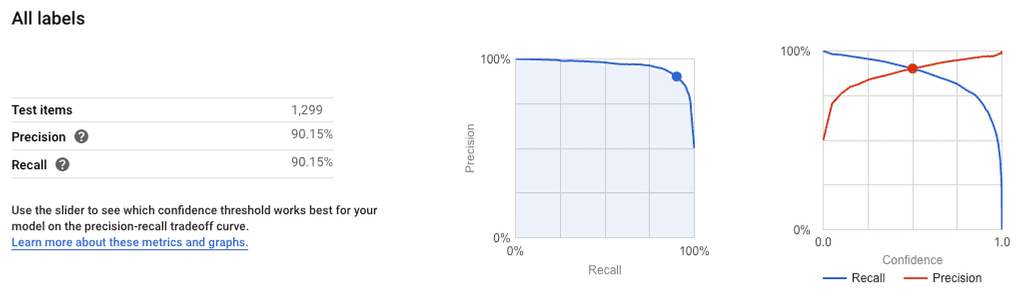

After letting AutoML process the drag show data for a few hours, the result was a machine learning model that was pretty darn good!

It correctly predicted the label more than 90% of the time. I could also raise the confidence threshold to make it more precise, i.e. only auto-approving shows that were very likely to be drag, though at the expense of sending more actual shows back to the human approval queue.
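The threshold trade-off is easier to see with numbers. The sketch below uses made-up scores, not real output from the DragTonight model, and the function names are hypothetical.

```python
from typing import List, Tuple


def route(score: float, threshold: float) -> str:
    """Auto-approve confident predictions; send the rest to a human."""
    return "auto_approve" if score >= threshold else "human_review"


def precision_recall(scored: List[Tuple[float, bool]], threshold: float) -> Tuple[float, float]:
    """Precision and recall of auto-approval at a given threshold.

    `scored` holds (model confidence that the event is drag, true label) pairs.
    """
    approved = [is_drag for score, is_drag in scored if score >= threshold]
    true_positives = sum(approved)
    total_drag = sum(is_drag for _, is_drag in scored)
    precision = true_positives / len(approved) if approved else 1.0
    recall = true_positives / total_drag if total_drag else 0.0
    return precision, recall


# Illustrative data only: (confidence, is it really a drag show?)
SCORED = [
    (0.97, True), (0.91, True), (0.85, False), (0.80, True),
    (0.60, True), (0.55, False), (0.30, False), (0.10, False),
]
```

On this toy data, a threshold of 0.5 auto-approves every real show (recall 1.0) but lets two non-drag events through (precision about 0.67). Raising it to 0.9 removes the mistakes (precision 1.0) but only half of the real shows skip the human queue (recall 0.5).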

Could you build a better model yourself? Sure, but at a significant time investment. Maybe once RuPaul invests.

Since launching the AI model, more than two-thirds of drag shows are now approved without human intervention. This means that shows hit the app faster and reliance on human reviewers has been drastically reduced.

It's a win for humans, machines, and drag performers alike.